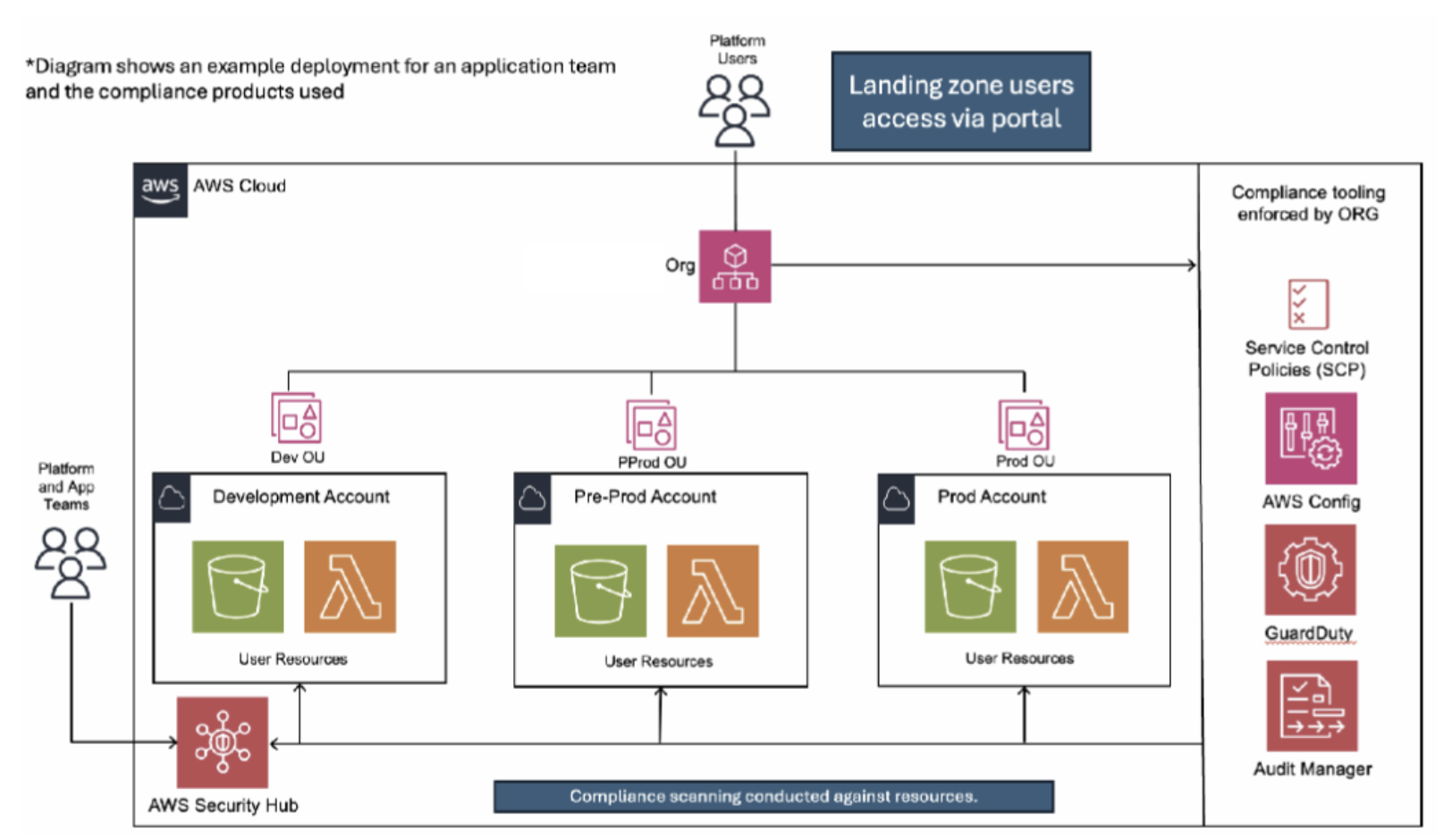

Security & Compliance Enforcement in an AWS Landing Zone

The main goal of this document is to define a method for controlling data and security compliance in an AWS landing zone environment that is accessed by many different application and platform teams. We assume the landing zone is already fully configured, with AWS Organizations used to deploy its components from scratch following the AWS Security Reference Architecture. For the purpose of this document we also assume that AWS Control Tower has not been used to curate the organisation's accounts, and that a custom process built on AWS Organizations is in place instead.

Enforcing Security Compliance

This section outlines the specific products and controls that need to be applied to the landing zone to manage and enforce security compliance. Each AWS service requested for use within the landing zone should undergo a thorough threat modelling process. As part of this evaluation, a set of preventative or detective guardrails should be defined for implementation. Until this process has been successfully completed, no application team should be permitted to use the service.

Typically, there are two modes of enforcing security compliance: detective and preventative. AWS provides native solutions for both modes. However, because individual AWS products have different limitations, it is likely that both types of control will be required for different services within the landing zone. Preventative controls are ideal for achieving the best security posture, but given the limitations of the current AWS preventative offering they should be implemented in conjunction with detective guardrails to ensure a robust security control posture across the whole AWS environment.

Preventative Controls

Preventative controls are mechanisms that explicitly prevent a principal from deploying incorrect configurations. For instance, if a principal attempts to launch an EC2 instance with a public IP address assigned, these controls will block that action. The sections below expand on suggested solutions, their use cases, and any potential drawbacks.

Service Control Policies (SCPs)

SCPs are a feature of AWS Organizations that allows you to implement policies that deny specific IAM actions. Ideally, SCPs should not be used to enforce service-level configuration, due to the severe constraints listed below. Instead, they should enforce platform-level controls such as allowlisting services, networking setup, region enforcement, limiting the use of IAM actions that can lead to privilege escalation (PassRole, password resets, etc.), MFA enforcement, tagging, and the use of various audit tools. The drawbacks of SCPs are as follows:
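As an illustration of a platform-level control, the following Python sketch builds a region-enforcement SCP as a JSON document. The approved regions and the list of exempted global services are placeholder assumptions, not values taken from this document; `aws:RequestedRegion` is the AWS global condition key normally used for this kind of restriction.

```python
import json


def build_region_deny_scp(allowed_regions, exempt_actions):
    """Return an SCP document that denies requests made outside allowed_regions.

    exempt_actions lists global services that must keep working regardless of
    region (the values passed below are illustrative assumptions).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideApprovedRegions",
                "Effect": "Deny",
                "NotAction": sorted(exempt_actions),
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {
                        "aws:RequestedRegion": sorted(allowed_regions)
                    }
                },
            }
        ],
    }


scp = build_region_deny_scp(
    allowed_regions={"eu-west-1", "eu-west-2"},  # assumed approved regions
    exempt_actions={"iam:*", "organizations:*", "sts:*", "support:*"},
)
print(json.dumps(scp, indent=2))
```

The printed document is what would be attached to an organisational unit; the attachment step itself is left out of this sketch.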

- A maximum of five SCPs can be attached to each organisational unit. Additionally, each SCP has a size limit (5,120 characters at the time of writing). Depending on the number of services in use within the organisation's AWS estate, there is a risk that the controls required for these services will exceed that limit.

- Because of this size limitation, as more services are added to the platform the organisation may be forced to include only the most critical guardrails in order to keep the SCP small. Consequently, this may hinder the organisation's ability to control all identified threats in a uniform way.

- Other AWS offerings, such as AWS Config (covered in a later section), are much better suited to monitoring resource-level configuration. Attempting to add all resource-level controls to SCPs will very quickly become cumbersome and difficult to manage.

- SCPs do not apply to the organisation's management account, which creates a risk if a threat actor is able to compromise any platform-level IAM principal in that account.

- Principals from accounts outside the organisation are not affected by SCPs.
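The size and count constraints in the list above can be checked before a policy is ever submitted. The sketch below is a minimal pre-flight check in Python; the two quota constants reflect the AWS Organizations quotas as commonly documented at the time of writing and should be treated as assumptions to verify, and the `example:Action…` names are hypothetical values used only to inflate the policy.

```python
import json

# Assumed quotas; confirm against the current AWS Organizations quota page.
SCP_MAX_CHARS = 5120
MAX_SCPS_PER_TARGET = 5


def scp_size(policy: dict) -> int:
    """Length of the policy once serialised without optional whitespace."""
    return len(json.dumps(policy, separators=(",", ":")))


def fits_quota(policies: list) -> bool:
    """True if this set of SCPs could be attached to a single OU or account."""
    if len(policies) > MAX_SCPS_PER_TARGET:
        return False
    return all(scp_size(p) <= SCP_MAX_CHARS for p in policies)


def deny_policy(actions: list) -> dict:
    """Build a simple deny-list SCP for the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Deny", "Action": actions, "Resource": "*"}],
    }


small = deny_policy(["s3:DeleteBucket"])
# Hypothetical action names, used only to show a policy outgrowing the limit.
large = deny_policy([f"example:Action{i:04d}" for i in range(400)])

print(scp_size(small), fits_quota([small]))
print(scp_size(large), fits_quota([large]))
```

Running a check like this in the pipeline that manages SCPs gives early warning when a new service's guardrails would push a policy over the limit.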

Whilst SCPs are not ideal for enforcing service-level configuration controls, they can and should be used in specific cases, for example when regulatory bodies impose hard-deny requirements for certain configurations. One such case could be a requirement to use customer-managed keys (CMKs) to encrypt data.

AWS Config

AWS Config is a service that continuously evaluates AWS resources against a set of user-defined rules. It can report any non-compliant resources and, if configured to do so, attempt auto-remediation.

Config has been included in this section because of its auto-remediation capability. It should be noted, however, that it is not truly preventative in the way an SCP is, since non-compliant resources can still be deployed before they are detected. Given this, Config should be configured in the following way:

- All controls identified by the threat modelling process are implemented as Config rules, with auto-remediation enabled where possible. However, care needs to be taken with auto-remediation to ensure it does not cause application downtime.

- Following a risk assessment, a resource-level exception to a specific rule should be configured where required, for example if a team needs to host a static public website in S3.

- A process must exist to report Config rule violations to the relevant application or resource owners, along with a pre-established incentive for addressing these issues.

- Config will be automatically enabled in all child accounts as part of the organisation's account vending process. Application teams will be unable to disable Config due to an SCP enforced by the platform team.
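The decision flow described in the list above (evaluate, honour approved exceptions, then either remediate or notify) can be modelled in a few lines. The following is a plain-Python sketch of that logic only; it does not call the AWS Config API, and the `Rule` class, the outcome strings, and the bucket ARNs are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    name: str
    # Enable only where remediation cannot cause application downtime.
    auto_remediate: bool = False
    # Exact resource ARNs approved through the risk assessment process.
    exempt_arns: set = field(default_factory=set)


def evaluate(rule: Rule, resource_arn: str, is_compliant: bool) -> str:
    """Decide what should happen to a resource after a rule evaluation."""
    if is_compliant:
        return "COMPLIANT"
    if resource_arn in rule.exempt_arns:  # exact match only, no wildcards
        return "EXEMPT"
    return "REMEDIATE" if rule.auto_remediate else "NOTIFY_OWNER"


rule = Rule(
    name="s3-bucket-public-read-prohibited",
    auto_remediate=True,
    exempt_arns={"arn:aws:s3:::example-static-website"},
)

print(evaluate(rule, "arn:aws:s3:::example-static-website", is_compliant=False))
print(evaluate(rule, "arn:aws:s3:::example-team-data", is_compliant=False))
```

Matching exemptions on the full ARN, rather than on a prefix or tag, keeps each exception limited to the single resource that was risk assessed.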

Note that Config is not repeated in the Detective Controls section to avoid duplication but, as described above, it can also perform an audit function.

CloudFormation Hooks or Pipeline Level Controls

Depending on the type of CI/CD process in place, controls can be applied to the pipeline to explicitly stop any configuration that does not comply with the completed threat models from reaching the platform. However, if these controls can only be applied to the pipeline run, there is a risk that a threat actor could bypass the pipeline and deploy directly into AWS. In this case, it’s imperative to ensure the same controls have also been applied in audit mode via AWS Config.
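A pipeline-level control can be as simple as a script that inspects the template before deployment. The sketch below, written in Python under the assumption that the pipeline has already parsed a CloudFormation template into a dictionary, flags EC2 instances that explicitly request a public IP address. The function name and the sample template are hypothetical, and the check is deliberately narrow: it does not cover instances that receive a public IP through subnet defaults, nor values supplied via intrinsic functions.

```python
def find_public_ip_instances(template: dict) -> list:
    """Return logical IDs of EC2 instances that explicitly request a public IP."""
    violations = []
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::EC2::Instance":
            continue
        interfaces = resource.get("Properties", {}).get("NetworkInterfaces", [])
        if not isinstance(interfaces, list):
            continue  # e.g. an intrinsic function; out of scope for this sketch
        for nic in interfaces:
            value = nic.get("AssociatePublicIpAddress") if isinstance(nic, dict) else None
            # Templates may express the boolean as true or as the string "true".
            if str(value).lower() == "true":
                violations.append(logical_id)
                break
    return violations


template = {
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "NetworkInterfaces": [
                    {"DeviceIndex": "0", "AssociatePublicIpAddress": True}
                ]
            },
        },
        "Worker": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "NetworkInterfaces": [
                    {"DeviceIndex": "0", "AssociatePublicIpAddress": False}
                ]
            },
        },
    }
}

violations = find_public_ip_instances(template)
if violations:
    print("Blocked: public IP requested by", ", ".join(violations))
```

In a real pipeline a non-empty result would fail the stage; because the check only runs inside the pipeline, the equivalent detective rule is still needed for out-of-band deployments.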

Detective Controls

Detective controls focus on auditing improper configurations within the platform rather than preventing them. They are typically employed when specific configurations cannot be supported by preventive controls, or as an additional layer of defence when such controls are limited to a pipeline. The organization should enable and enforce the following services, ensuring their use is backed by an SCP.

GuardDuty

GuardDuty is an AWS service that monitors and audits AWS accounts for security concerns or unusual behaviours that could signal a security issue. While it serves as a security monitoring tool, any alerts generated may also suggest that a specific threat has been overlooked in the threat model. If recurring patterns arise with certain alerts, a comprehensive review of the threat model should be undertaken to prevent future incidents and to enhance platform controls.

Audit Manager

Audit Manager is an AWS service through which prebuilt compliance frameworks, such as the CIS AWS Foundations Benchmark and PCI DSS, can be applied directly to an AWS account. This gives immediate insight into most AWS resources and their overall compliance state. Another notable feature of Audit Manager is that customers can design their own frameworks to be applied to the entire AWS Organization. Audit Manager is particularly suited to validating the configuration of services at scale, and can provide insight into the general security posture of specific services regardless of how they are deployed across the organisation by different teams.

Security Hub

AWS Security Hub is a service that correlates findings from multiple AWS services that report on security and compliance issues within an AWS estate. It should serve as the central aggregation point for all misconfigured services across the organisation. This helps both platform security teams and application owners identify areas of their AWS estate with active vulnerabilities reported by the services that send findings to Security Hub.
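To show how aggregated findings might be turned into a per-team view, the Python sketch below counts failed compliance findings by account and severity. It assumes the findings have already been retrieved and follow the AWS Security Finding Format fields `AwsAccountId`, `Severity.Label`, and `Compliance.Status`; the account IDs and the `summarise` helper are hypothetical.

```python
from collections import Counter


def summarise(findings: list) -> Counter:
    """Count failed compliance findings per (account ID, severity label)."""
    counts = Counter()
    for finding in findings:
        if finding.get("Compliance", {}).get("Status") != "FAILED":
            continue
        key = (finding["AwsAccountId"], finding["Severity"]["Label"])
        counts[key] += 1
    return counts


# Illustrative findings with placeholder account IDs.
findings = [
    {"AwsAccountId": "111111111111", "Severity": {"Label": "HIGH"}, "Compliance": {"Status": "FAILED"}},
    {"AwsAccountId": "111111111111", "Severity": {"Label": "HIGH"}, "Compliance": {"Status": "FAILED"}},
    {"AwsAccountId": "222222222222", "Severity": {"Label": "LOW"}, "Compliance": {"Status": "FAILED"}},
    {"AwsAccountId": "222222222222", "Severity": {"Label": "LOW"}, "Compliance": {"Status": "PASSED"}},
]

summary = summarise(findings)
for (account_id, severity), count in sorted(summary.items()):
    print(account_id, severity, count)
```

A summary of this shape can feed the reporting process to application owners described earlier.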

Exception Process

With all the compliance products enabled as described above, there may still be use cases where a team requires an exception from a specific security control. This may be for a variety of reasons, such as a specific application or platform not functioning correctly if a certain feature is disabled. Teams requesting exceptions must submit a detailed rationale, specifying the necessity and duration. Each request undergoes a rigorous review by the security team to assess potential risks and benefits. Approval is conditional upon implementing compensating controls to mitigate risks. Regular audits ensure compliance and re-evaluation, confirming the exception’s continued necessity.

Since our main method of controlling AWS product-level configuration will be AWS Config, any exception that is granted will require direct modification of the Config rule files. Care should be taken to ensure the exception is granted at the most restrictive level, so any exclusion should be granted explicitly on the Amazon Resource Name (ARN) of the resource. This keeps the exception as specific as possible, which should prevent other platform teams or threat actors from taking advantage of an overly broad exception.

Furthermore, exceptions can also be granted to SCPs. For example, iam:PassRole is considered a dangerous privilege escalation path, as it can be used to pass higher-privileged roles to resources that the current role can create. However, in the case of pipeline deployment roles, it is common for the higher-privileged role to deploy the resource and then pass a more restricted role to the resource for its day-to-day operation. In this case, an exception from the SCP will be required for the specific pipeline roles.
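One common way to express such an exception is a deny statement conditioned on the calling principal. The Python sketch below builds an SCP that denies `iam:PassRole` to every principal except those whose ARN matches an approved pattern, using the `ArnNotLike` operator on the `aws:PrincipalArn` condition key. The role naming pattern is a hypothetical assumption; an actual exception should name the specific pipeline roles as narrowly as possible.

```python
import json


def build_passrole_scp(exempt_role_patterns: list) -> dict:
    """Deny iam:PassRole unless the caller matches one of the exempt ARN patterns."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyPassRoleExceptPipelines",
                "Effect": "Deny",
                "Action": "iam:PassRole",
                "Resource": "*",
                "Condition": {
                    "ArnNotLike": {"aws:PrincipalArn": exempt_role_patterns}
                },
            }
        ],
    }


# Hypothetical naming convention for pipeline deployment roles.
passrole_scp = build_passrole_scp(["arn:aws:iam::*:role/pipeline-deploy-*"])
print(json.dumps(passrole_scp, indent=2))
```

Because the deny applies whenever the principal matches none of the listed patterns, every additional pattern widens the exception and should go through the same review as any other exception request.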

To enhance the developer experience, the organisation could establish a disconnected version of the landing zone, free from access to any company data or internal systems. In this environment, most security controls can be relaxed, and any new accounts created would have a 30-day lifespan. This approach would enable teams to test proofs of concept or explore new AWS functionality that might otherwise be restricted in more heavily regulated settings, with minimal security constraints.

Access Posture

Securing Identity and Access Management (IAM) across the AWS platform is a critical extension of the compliance framework established earlier in this document. If IAM is left unchecked and does not adhere to the principle of least privilege, there is a high likelihood that a gap in our control framework would eventually be exposed, for example through an over-privileged administrator role. Securing IAM ensures a robust defence-in-depth approach to security across the platform.

There are two possible deployment methods that are considered for deploying resources in AWS: ClickOps and Pipeline. ClickOps refers to users logging into the console directly with the intent of deploying resources whereas a pipeline is driven by Infrastructure-as-Code with an accompanying CI/CD system to deploy resources directly into AWS.

Regardless of whether the environment supports ClickOps or is deployed only via pipeline, the principle of least privilege should be followed and IAM roles should be given access only to the actions they specifically require. This includes limiting access to approved services only. Additional care should be taken with trust and resource policies to ensure they are not so broadly scoped that a malicious actor could take advantage of them.

ClickOps IAM

If ClickOps is enabled in the environment, it's crucial to ensure that developers and application teams do not hold excessive privileges. All forms of ClickOps in production environments should be restricted, as it can be challenging to recover from incidents without having all resources stored as code.

No IAM users should be in use, as they present a significant risk to the platform because their access keys do not expire by default. Even if key expiry is enforced, the keys will still live longer than any SSO-generated credentials. SSO should be used in place of IAM users.

Root logins should be avoided. A root user is created automatically when a new AWS account is established and comes with extensive access permissions. If SSO is properly configured, the root password should not be reset after the account is created, rendering root login impossible. SCPs can help restrict the actions the root user can perform on specific services. In child accounts, all actions by the root user should be blocked.
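Blocking the root user in child accounts is typically done with a single deny statement keyed on the principal ARN. The Python sketch below defines such an SCP and includes a small helper that approximates the wildcard match locally with `fnmatch`. The helper is an illustrative assumption and not how IAM evaluates conditions internally, and the account ID is a placeholder.

```python
import json
from fnmatch import fnmatchcase

ROOT_ARN_PATTERN = "arn:aws:iam::*:root"

# Deny every action when the caller is the root user of a member account.
deny_root_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyRootUser",
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            "Condition": {
                "StringLike": {"aws:PrincipalArn": [ROOT_ARN_PATTERN]}
            },
        }
    ],
}


def is_root_principal(principal_arn: str) -> bool:
    """Local approximation of the StringLike match used in the SCP above."""
    return fnmatchcase(principal_arn, ROOT_ARN_PATTERN)


print(json.dumps(deny_root_scp, indent=2))
print(is_root_principal("arn:aws:iam::111111111111:root"))
print(is_root_principal("arn:aws:iam::111111111111:role/admin"))
```

As with any SCP, this has no effect on the management account, so the root user there needs separate protection.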

Pipeline IAM

If deployments to AWS are made via pipeline, it’s important to also enforce the principle of least privilege without blocking any day-to-day operation. The best way to achieve this would be to review all IAM permissions and work out which ones will never be required by the pipeline. For example, if users are not allowed to create their own AWS accounts, then this permission should be removed and only granted to platform level pipelines, which are not accessible by any users.

Finally, it’s important to ensure CI/CD accounts are not shared across environments, as a threat actor is much more likely to compromise development accounts, given their testing nature. Separate accounts ensure that a threat actor cannot jump from development to production using a compromised pipeline account.

Data Classification & Controls

Data classification within AWS can be somewhat complex, as there is no comprehensive native data loss prevention (DLP) solution that can both scan for sensitive data and encrypt it; the closest offering is Amazon Macie, discussed below. It's advisable for the organisation to establish clear policies and standards to guide teams in managing their data appropriately, factoring in any regulatory requirements. For instance, if Highly Confidential data is stored in AWS, it may be required to be encrypted with an HSM-backed KMS key. Amazon Macie can scan S3 buckets for specific types of data, and its findings can be used to trigger re-encryption or deletion of the affected objects in line with organisational data storage standards. However, Macie currently supports only S3, which means data held in other AWS storage services may not be compliant or adequately secured.